Product discovery models are how teams decide what to build, why it matters, and when to say no. Many teams still ship on opinion. The best teams combine autonomy with evidence, then move with calm precision.

# The map: two axes, four models

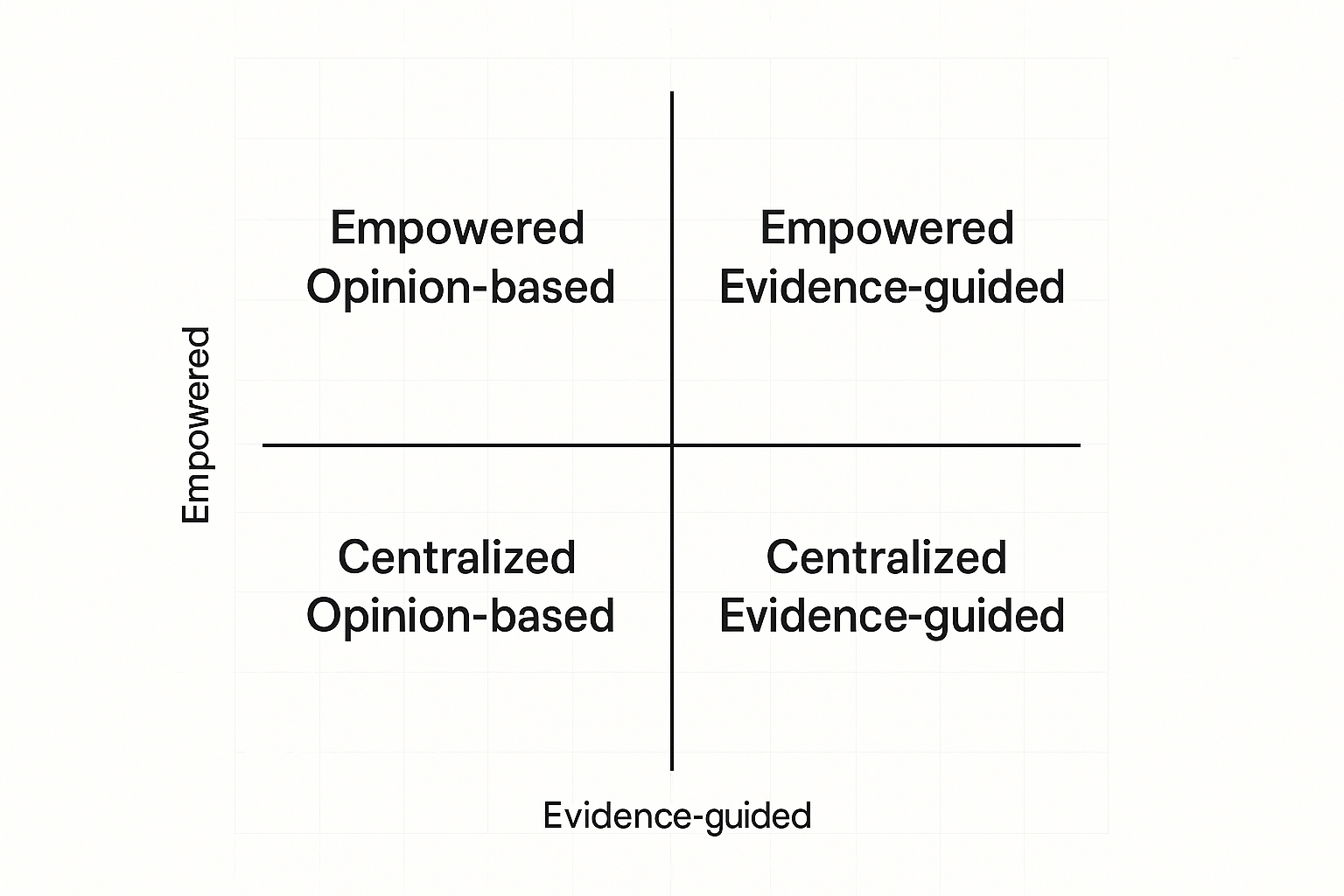

The landscape sits on two axes: centralized to empowered, and opinion-based to evidence-guided. That 2x2 yields four product discovery models, each describing how an org learns and decides.

- Centralized opinion-based

- Centralized evidence-guided

- Empowered opinion-based

- Empowered evidence-guided

For a deeper primer on the 2x2, see Itamar Gilad’s overview of discovery models: https://itamargilad.com/product-discovery-models/

# Model 1: Centralized opinion-based

A small leadership circle sets direction from intuition and precedent. Product teams execute.

- Strengths: fast top-down calls, clear narratives.

- Risks: distance from customers, misallocated effort, weak team ownership.

- Signals: solutions shipped without interviews, roadmap shaped in QBRs, surprise churn.

Takeaway: useful in crisis or high-regulation contexts, but fragile without customer contact.

# Model 2: Centralized evidence-guided

A core research or strategy group runs interviews, surveys, and market scans, then hands the resulting strategy to teams.

- Strengths: consistent methods, lower duplication, sharper focus.

- Risks: slow feedback loops, brittle when markets shift, teams lack context.

- Signals: polished decks, thin team empathy, late discovery of edge cases.

Takeaway: a solid stepping stone. Pair it with routine customer exposure for delivery teams.

# Model 3: Empowered opinion-based

Product trios explore and decide on their own, but rely mostly on judgment.

- Strengths: speed, ownership, local adaptability.

- Risks: bias, conflicting bets, discovery debt.

- Signals: divergent roadmaps, strong team conviction, light research artifacts.

Takeaway: autonomy helps, but evidence is still missing. Add structure before you scale.

# Model 4: Empowered evidence-guided

Distributed teams run discovery with shared standards: weekly interviews, clear hypotheses, measurable outcomes.

- Strengths: fast learning, coherent decisions, high engagement.

- Risks: requires skills, tooling, and governance to avoid drift.

- Signals: living opportunity maps, tight problem statements, measurable bets.

Takeaway: the target state for most orgs. It trades heroics for craft.

# The risks you must de-risk

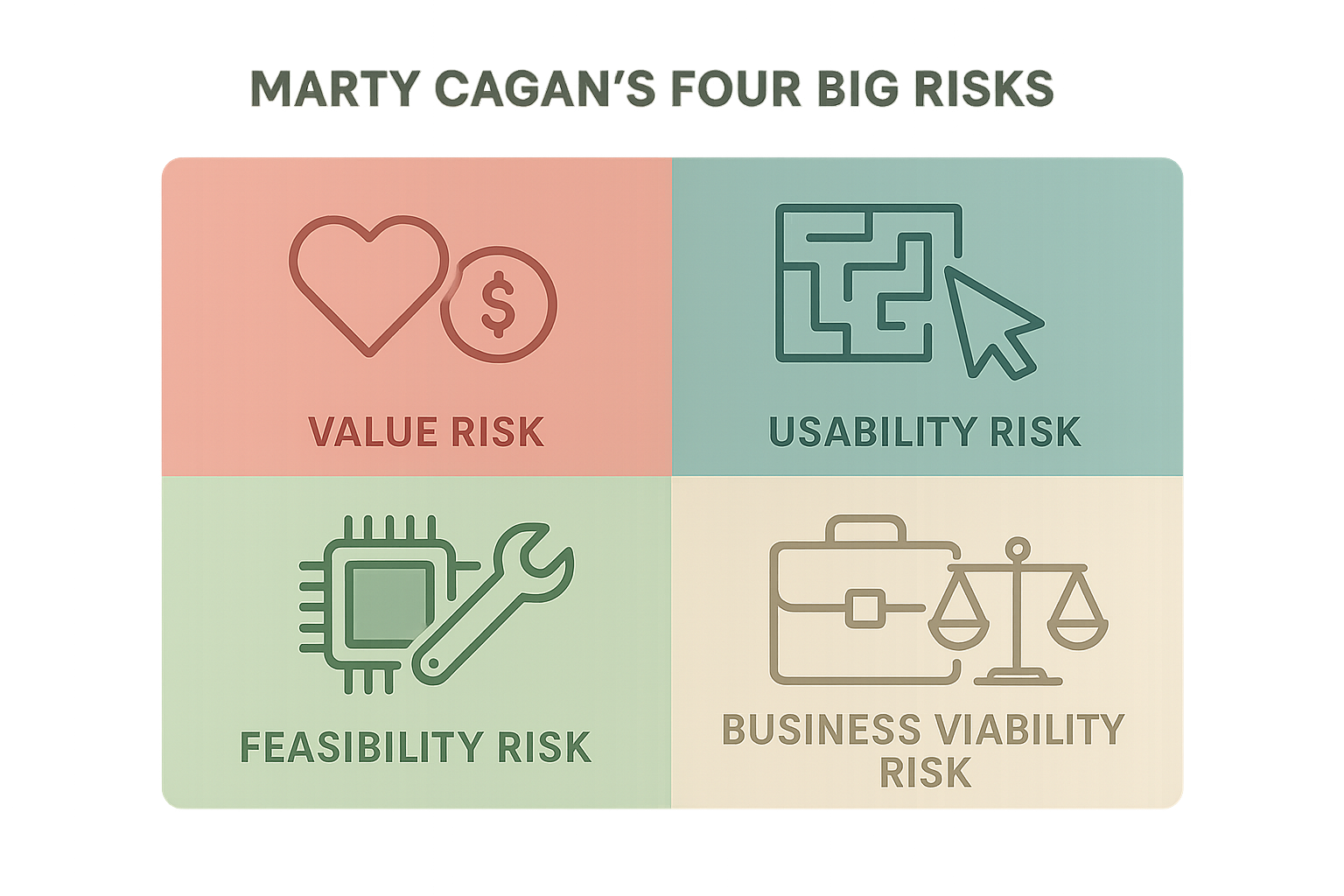

Whatever model you run, you still face four big risks. Marty Cagan’s framing is simple and durable: https://www.svpg.com/four-big-risks/

- Value risk: do customers want it, and will they pay or adopt?

- Usability risk: can people figure it out without hand-holding?

- Feasibility risk: can we build and operate it at target cost and time?

- Business viability risk: does it work for sales, legal, finance, and the brand?

Teams tend to over-invest in feasibility and usability, yet value and viability are the risks that most often sink launches. Front-load value risk: it is the most expensive one to get wrong.

# Methods that fit your context

You do not need a single framework. You need a small toolbox you actually use.

- Design Thinking, to build empathy and spark new directions.

- Double Diamond, to separate problem definition from solution delivery. See Productboard’s practical guide: https://www.productboard.com/blog/step-by-step-framework-for-better-product-discovery/

- Jobs To Be Done, to focus on the job, not the persona. Strategyn offers a solid introduction: https://strategyn.com/jobs-to-be-done/

- Lean Startup, to validate value with real usage, not sentiment.

- Dual-Track Agile, to keep discovery and delivery moving in parallel. Atlassian’s overview is a crisp starting point: https://www.atlassian.com/agile/product-management/discovery

- Opportunity Solution Tree, to map outcomes, opportunities, and bets.

Principle: choose one method for problem framing, one for hypothesis testing, and one for continuous mapping. Use them every week, not just at offsites.
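To make the Opportunity Solution Tree concrete, here is a minimal Python sketch of it as a plain tree: one outcome at the root, opportunities beneath it, candidate solutions beneath those, and assumption tests as leaves. The `Node` class, the status values, and the pruning rule (drop a solution once every test under it has failed) are illustrative assumptions, not an official format from any tool or author.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One node in a hypothetical opportunity solution tree.

    kind: "outcome", "opportunity", "solution", or "test".
    status: only meaningful for tests ("pending", "passed", "failed").
    """

    kind: str
    title: str
    status: str = "pending"
    children: list[Node] = field(default_factory=list)

    def add(self, child: Node) -> Node:
        """Attach a child and return it, so branches can be built in one line."""
        self.children.append(child)
        return child


def is_refuted(solution: Node) -> bool:
    """A solution is refuted when it has tests and every one of them failed."""
    tests = [c for c in solution.children if c.kind == "test"]
    return bool(tests) and all(t.status == "failed" for t in tests)


def prune(node: Node) -> None:
    """Remove refuted solutions anywhere under `node`, in place."""
    node.children = [
        c for c in node.children if not (c.kind == "solution" and is_refuted(c))
    ]
    for child in node.children:
        prune(child)


def render(node: Node, depth: int = 0) -> list[str]:
    """Indented text outline of the tree, two spaces per level."""
    line = f"{'  ' * depth}{node.kind}: {node.title}"
    if node.kind == "test":
        line += f" [{node.status}]"
    lines = [line]
    for child in node.children:
        lines.extend(render(child, depth + 1))
    return lines


# Example: one outcome, one opportunity, two competing solutions.
root = Node("outcome", "Increase week-4 retention")
opp = root.add(Node("opportunity", "New users cannot find saved reports"))
pin = opp.add(Node("solution", "Pin recent reports to the home screen"))
pin.add(Node("test", "Prototype usability test", status="passed"))
email = opp.add(Node("solution", "Onboarding email series"))
email.add(Node("test", "Fake-door click test", status="failed"))

prune(root)
print("\n".join(render(root)))
```

Keeping the tree as data rather than a whiteboard photo makes pruning a repeatable operation: the failed email idea drops out, and the surviving branch shows exactly which evidence kept it alive.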

# Make feedback your operating system

Discovery lives or dies on feedback quality. Capture feedback at many points, then make sense of the noise.

- Channels: interviews, win-loss notes, support tickets, product analytics, NPS verbatims, sales calls, community threads.

- Hygiene: dedupe, tag to themes, link to customers, highlight novelty.

- Cadence: weekly review, not quarterly archaeology.
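The hygiene steps above can be sketched in a few lines of Python. This version assumes a hypothetical keyword-to-theme map and treats two notes as duplicates when the same customer sent the same text after normalizing case and punctuation; real tools, including AI-assisted clustering, use far richer matching. "Novel" here is an assumption too: an item that matches no theme, or only themes not seen in earlier weeks.

```python
from __future__ import annotations

import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackItem:
    channel: str      # e.g. "support", "interview", "sales call"
    customer_id: str  # links the note back to a customer
    text: str


# Hypothetical keyword-prefix -> theme map; each team maintains its own taxonomy.
THEMES = {
    "export": "reporting",
    "report": "reporting",
    "invoice": "billing",
    "billing": "billing",
    "login": "access",
    "sso": "access",
}


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def tag(text: str) -> set[str]:
    """Themes whose keyword starts any word in the note ("exporting" -> "export")."""
    words = normalize(text).split()
    return {theme for kw, theme in THEMES.items() for w in words if w.startswith(kw)}


def weekly_review(items: list[FeedbackItem], known_themes: set[str]):
    """Dedupe, tag, and summarize one week of feedback.

    Returns (theme counts, distinct customers per theme, novel items), where a
    novel item matches no theme or only themes absent from `known_themes`.
    """
    seen: set[tuple[str, str]] = set()
    counts: Counter[str] = Counter()
    customers: dict[str, set[str]] = {}
    novel: list[FeedbackItem] = []
    for item in items:
        key = (item.customer_id, normalize(item.text))
        if key in seen:
            continue  # same customer, same message: count it once
        seen.add(key)
        themes = tag(item.text)
        for theme in themes:
            counts[theme] += 1
            customers.setdefault(theme, set()).add(item.customer_id)
        if not themes & known_themes:
            novel.append(item)
    return counts, customers, novel


items = [
    FeedbackItem("support", "c1", "CSV export is broken!"),
    FeedbackItem("support", "c1", "csv export is broken"),  # duplicate of the above
    FeedbackItem("interview", "c2", "Exporting reports takes forever"),
    FeedbackItem("sales call", "c3", "We need SSO before we can buy"),
    FeedbackItem("community", "c4", "Dark mode, please"),
]
counts, customers, novel = weekly_review(items, known_themes={"reporting", "billing"})
print(counts, customers, [i.customer_id for i in novel])
```

Counting distinct customers per theme, not just mentions, keeps one vocal account from looking like a trend in the weekly review.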

A practical stack looks like this: a central feedback inbox, tagged by area and opportunity; a simple evidence log tied to hypotheses; and an opportunity map the team keeps current. If you want AI-assisted clustering and insight prompts across channels, try Sleekplan Intelligence to reduce triage time and surface patterns you would miss: https://sleekplan.com/intelligence/

# The product trio, in the room

Discovery is not a PM solo act. Product, design, and engineering should interview together. Teresa Torres’ work on trios is the reference: https://www.producttalk.org/product-trios/

- PM listens for outcomes and constraints.

- Designer tracks moments, language, and flow friction.

- Engineer probes feasibility and hidden coupling.

Shared exposure builds shared judgment. It also speeds delivery because the context lives with the team, not in a slide deck.

# Lightweight governance, not heavy gates

Autonomy needs boundaries.

- Define evidence thresholds: what proof moves an idea from explore, to test, to invest.

- Clarify decision rights: who decides, who must be consulted, who is informed.

- Set review rhythms: weekly trio reviews, a monthly cross-functional check-in, and a quarterly strategy reset.
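Evidence thresholds work best when they are written down precisely enough to check. The sketch below encodes one hypothetical policy in Python: an idea moves from explore to test once a minimum number of distinct customers have described the problem, and from test to invest once an experiment shows a minimum lift in the target metric. `Idea`, `next_stage`, and both threshold values are placeholders for illustration, not recommendations.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical thresholds; each team should set, publish, and revisit its own.
MIN_CUSTOMERS_TO_TEST = 5   # distinct customers who described the problem
MIN_LIFT_TO_INVEST = 0.05   # relative lift in the target metric from an experiment


@dataclass
class Idea:
    name: str
    stage: str = "explore"  # "explore", "test", or "invest"
    customers_with_problem: int = 0
    experiment_lift: float | None = None  # None until an experiment has run


def next_stage(idea: Idea) -> str:
    """Return the stage the current evidence supports, one step at a time."""
    if idea.stage == "explore" and idea.customers_with_problem >= MIN_CUSTOMERS_TO_TEST:
        return "test"
    if (
        idea.stage == "test"
        and idea.experiment_lift is not None
        and idea.experiment_lift >= MIN_LIFT_TO_INVEST
    ):
        return "invest"
    return idea.stage  # not enough evidence yet: stay put


idea = Idea("Pin recent reports", customers_with_problem=6)
idea.stage = next_stage(idea)  # enough interviews: explore -> test
idea.experiment_lift = 0.02
print(idea.stage, next_stage(idea))  # lift below threshold, so it stays in test
```

Because `next_stage` never skips a stage, an idea with strong interview evidence still has to pass a test before it earns investment, which is the kind of boundary that lets trios move fast without a heavy approval gate.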

Good governance keeps teams aligned without killing momentum.

# Measure discovery like a craft

You cannot manage what you do not measure, so measure the right things at the right time.

- Process signals: weekly customer touchpoints per team, hypotheses run per sprint, cycle time from question to insight.

- Quality signals: percent of bets with clear problem statements, solution abandonment rate after tests, percent of shipped work preceded by discovery.

- Outcome signals: feature success rate at 30-90 days, outcome velocity, retention or NRR lift linked to validated bets.

Track trends, not vanity counts. Work stopped because of evidence is a healthy sign.
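Several of the quality and outcome signals above are simple ratios over a log of bets. This Python sketch assumes a hypothetical `Bet` record with one boolean per question and returns `None` for any ratio whose denominator is empty, so a quiet quarter is not mistaken for a 0% result. How the 30-90 day success check is judged is left to whoever fills in `hit_target`.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Bet:
    has_problem_statement: bool
    tested: bool
    abandoned_after_test: bool
    shipped: bool
    had_discovery: bool             # discovery work preceded the build
    hit_target: bool | None = None  # outcome check 30-90 days after launch; None if not yet judged


def rate(numerator: int, denominator: int) -> float | None:
    """Fraction, or None when there is nothing to measure yet."""
    return numerator / denominator if denominator else None


def quality_signals(bets: list[Bet]) -> dict[str, float | None]:
    tested = [b for b in bets if b.tested]
    shipped = [b for b in bets if b.shipped]
    judged = [b for b in shipped if b.hit_target is not None]
    return {
        "problem_statement_rate": rate(sum(b.has_problem_statement for b in bets), len(bets)),
        "abandonment_after_test": rate(sum(b.abandoned_after_test for b in tested), len(tested)),
        "shipped_with_discovery": rate(sum(b.had_discovery for b in shipped), len(shipped)),
        "feature_success_rate": rate(sum(bool(b.hit_target) for b in judged), len(judged)),
    }


quarter = [
    Bet(True, True, True, False, True),                       # tested, then stopped
    Bet(True, True, False, True, True, hit_target=True),
    Bet(False, False, False, True, False, hit_target=False),
    Bet(True, True, False, True, True),                       # shipped, too early to judge
]
signals = quality_signals(quarter)
print(signals)
```

Run it per quarter and compare the results over time rather than reading one snapshot; a rising `abandonment_after_test` can be exactly the healthy stopped-work signal described above.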

# A 90-day plan to move right and down the 2x2

The goal: move toward the empowered, evidence-guided model.

- Weeks 1-2: define a single product outcome, set evidence thresholds, set up a shared discovery log and feedback inbox.

- Weeks 3-4: schedule weekly interviews, start an opportunity solution tree, run one usability test on a prototype.

- Weeks 5-8: define success metrics, ship an MVP for one opportunity, and run two iterations based on behavior, not opinion.

- Weeks 9-12: prune your tree, keep what works, stop what does not, and present the decisions that evidence changed.

Deliverables, not theater: problem statements, interview notes, test summaries, and a small number of shipped improvements that moved an outcome.

# Quick answers

- What are the four product discovery models? Centralized opinion-based, centralized evidence-guided, empowered opinion-based, empowered evidence-guided.

- Which model should we use first? Start where you are. If discovery is new, centralize standards, then empower teams as skills grow.

- How many interviews per week? Aim for 4-6 with the trio present. Keep them short, focused, and continuous.

- Do we need a big research team? Not to start. You need shared methods, a clean feedback hub, and a cadence.

# Final note

Great discovery is quiet and disciplined. It respects detail, seeks clarity, and takes responsibility for what gets built. If you combine autonomy with evidence, small choices compound into durable product-market fit.

References worth your time:

- Discovery models by Itamar Gilad: https://itamargilad.com/product-discovery-models/

- The Four Big Risks by SVPG: https://www.svpg.com/four-big-risks/

- Product trios by Teresa Torres: https://www.producttalk.org/product-trios/

- Discovery overview by Atlassian: https://www.atlassian.com/agile/product-management/discovery